Enhancing Chat Application Architecture with Terraform and Kubernetes - Part 2

- Author: Geonhyuk Im (@GeonHyuk)

Introduction

In the previous blog post, I outlined the architecture of the Blabber-Hive chat application and discussed the migration plan of the server to a Kubernetes cluster using Terraform.

In this blog post, I will continue the discussion by migrating the server from Docker Compose to a local Kubernetes cluster.

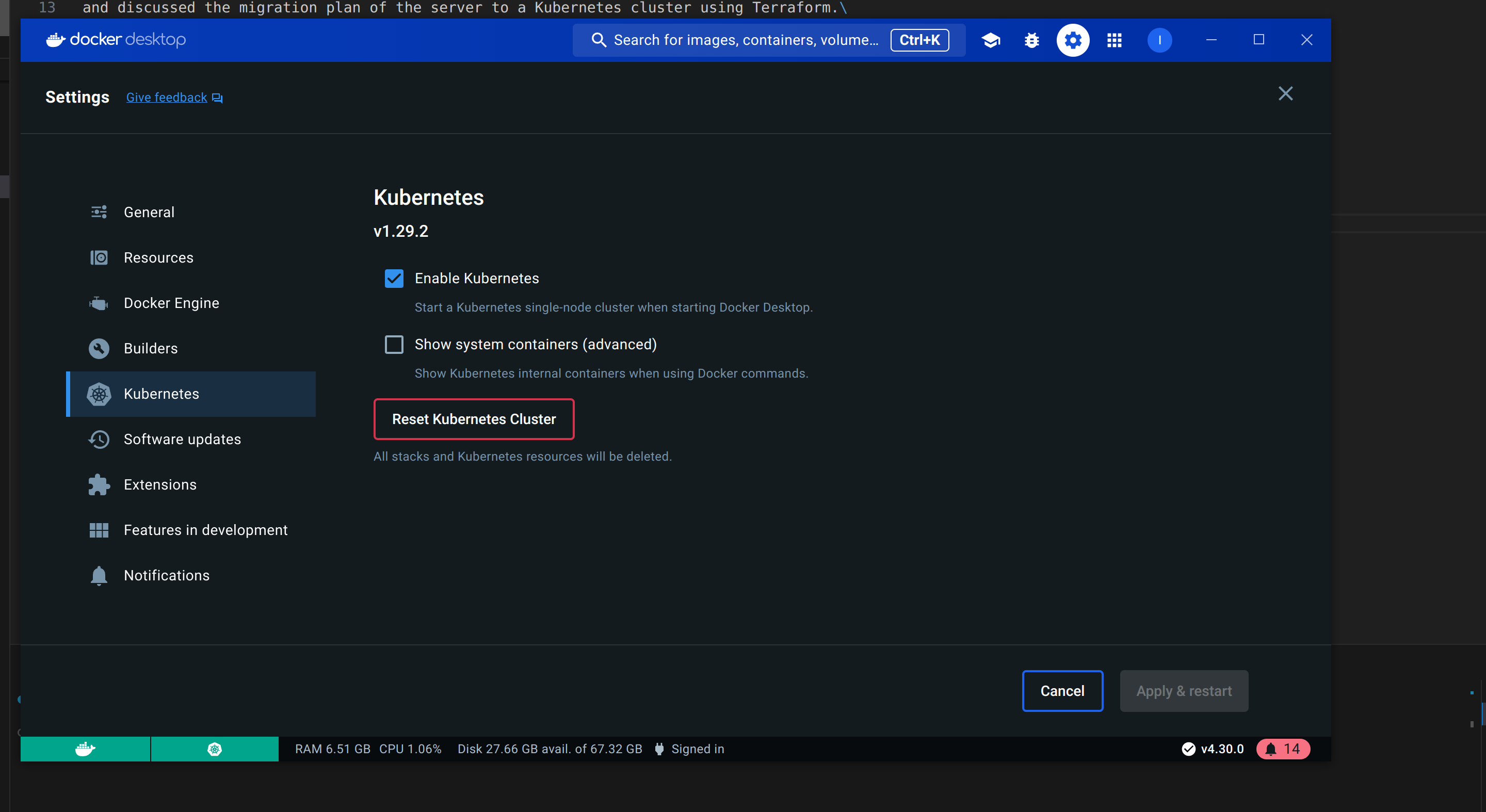

Kubernetes Cluster Setup

I used Docker Desktop's built-in Kubernetes cluster for local development.

Docker Desktop registers its built-in cluster under a kubectl context named docker-desktop, which is the default context.

This context is configured to use localhost for accessing the Kubernetes API server and other services running within the Kubernetes cluster.

So in my case, no additional cluster configuration was needed.

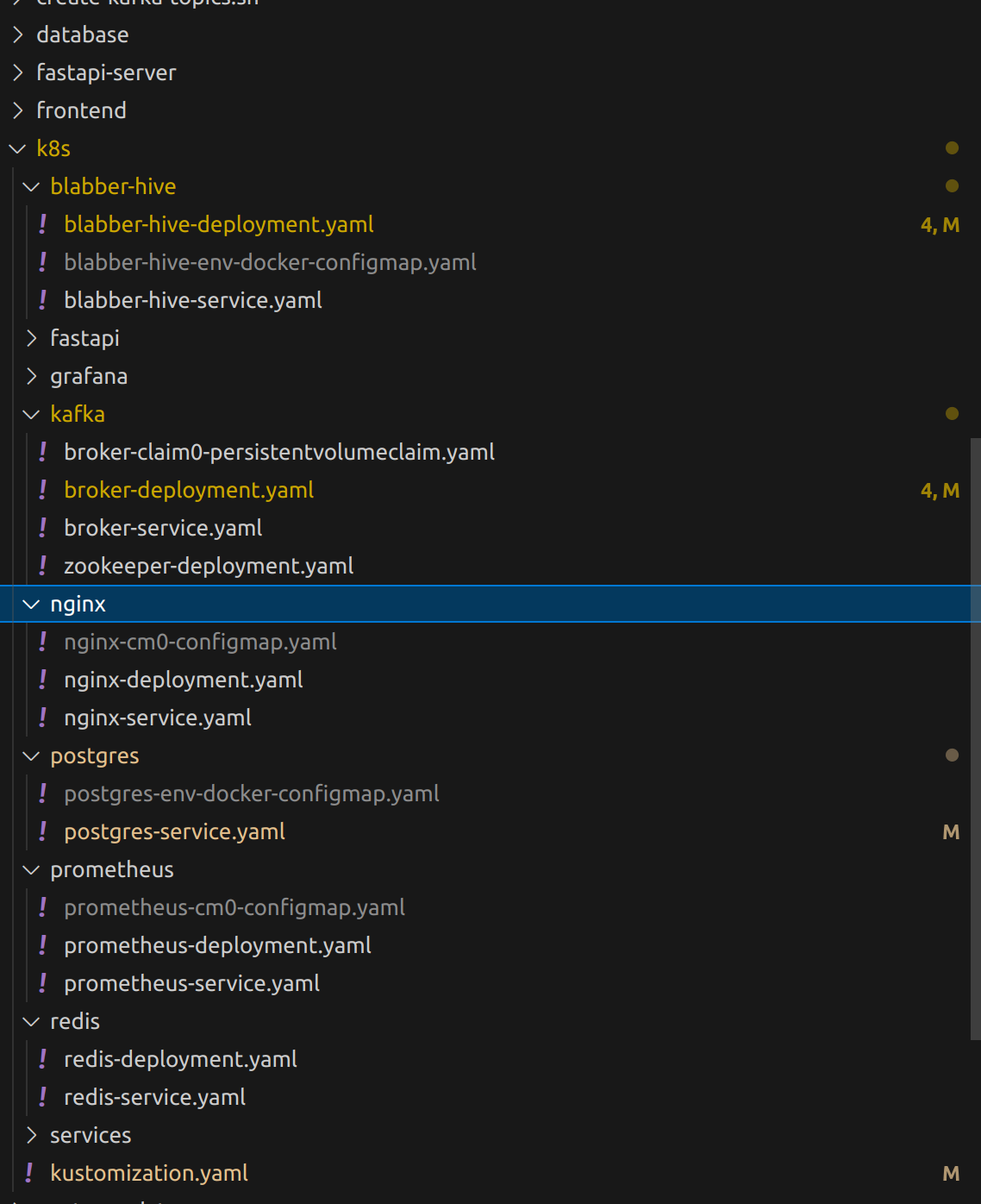

Writing Kubernetes Manifests

In the previous blog post, I used Kompose to convert the Docker Compose file to Kubernetes manifests.

The generated manifests were not perfect, but they were a good starting point. Initially, I grouped the manifests by resource type, in directories like 'deployments' and 'services', but that became hard to maintain as the number of services grew. So I created one directory per service and put all of its related manifests there.

My k8s directory structure looks like this:

root/
  k8s/
    blabber-hive/
      deployment.yaml
      service.yaml
    fastapi/
      deployment.yaml
      service.yaml
    ...

I added all ConfigMaps to .gitignore so they stay out of version control, since this is a public repository and they contain sensitive values.

I also created a Makefile to manage kubectl commands.

Here's the Makefile:

# Makefile

# Run the application
run:
	@echo "Setting up the stateful infrastructures..."
	@echo "Running the Kubernetes cluster..."
	@kubectl apply -k ./k8s

shutdown:
	@echo "Shutting down the Kubernetes cluster..."
	@kubectl scale --replicas=0 deployment blabber-hive
	@kubectl scale --replicas=0 deployment broker
	@kubectl scale --replicas=0 deployment fastapi
	@kubectl scale --replicas=0 deployment grafana
	@kubectl scale --replicas=0 deployment nginx
	@kubectl scale --replicas=0 deployment postgres
	@kubectl scale --replicas=0 deployment prometheus
	@kubectl scale --replicas=0 deployment redis
	@kubectl scale --replicas=0 deployment zookeeper
# @echo "Shutting down the stateful infrastructures..."
# @docker compose -f ./docker-compose-k8s.yml down

clean:
	@echo "Deleting all Kubernetes resources..."
	@kubectl delete all --all

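The kubectl apply -k command builds the manifests with Kustomize. kubectl has bundled Kustomize since v1.14, so the flag works without a separate install, but I also installed the standalone kustomize CLI.

I built it from the Go source by following the official instructions: https://kubectl.docs.kubernetes.io/installation/kustomize/source/

Then I defined kustomization.yaml in the k8s directory: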

# kustomization.yaml
resources:
  - blabber-hive/blabber-hive-deployment.yaml
  - blabber-hive/blabber-hive-service.yaml
  - blabber-hive/blabber-hive-env-docker-configmap.yaml
  - fastapi/fastapi-deployment.yaml
  - fastapi/fastapi-service.yaml
  - grafana/grafana-deployment.yaml
  - grafana/grafana-service.yaml
  - kafka/broker-claim0-persistentvolumeclaim.yaml
  - kafka/broker-deployment.yaml
  - kafka/broker-service.yaml
  - kafka/zookeeper-deployment.yaml
  - nginx/nginx-cm0-configmap.yaml
  - nginx/nginx-deployment.yaml
  - nginx/nginx-service.yaml
  - postgres/postgres-service.yaml
  - postgres/postgres-env-docker-configmap.yaml
  - prometheus/prometheus-cm0-configmap.yaml
  - prometheus/prometheus-deployment.yaml
  - prometheus/prometheus-service.yaml
  - redis/redis-deployment.yaml
  - redis/redis-service.yaml

Another option is to create a kustomization.yaml file in each subdirectory, but I found it more maintainable to list all resources in a single file.
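For comparison, the per-directory layout could look roughly like the sketch below. It is not what I ended up using; it assumes each service directory gets its own kustomization.yaml and that the root file only points at those directories. The file names are taken from the single-file version above, and the two YAML documents stand for two separate files.

```yaml
# k8s/kustomization.yaml (root): lists directories instead of individual files
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - blabber-hive
  - fastapi
  # ...one entry per service directory
---
# k8s/blabber-hive/kustomization.yaml: paths are relative to this directory
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - blabber-hive-deployment.yaml
  - blabber-hive-service.yaml
  - blabber-hive-env-docker-configmap.yaml
```

The trade-off is more files to keep in sync in exchange for being able to apply a single service with kubectl apply -k ./k8s/blabber-hive.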

Writing Deployments and Services

For each service, I created a deployment and a service manifest.

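In Kubernetes, a Service gives a set of pods a stable network endpoint. By default it is reachable only inside the cluster (ClusterIP), and it can be exposed outside the cluster with a type such as NodePort or LoadBalancer.

A Deployment manages the application's lifecycle: it creates the pods, keeps the desired number of replicas running, and rolls out updates. Pods are the smallest deployable units in Kubernetes.

For example, here are the Service and Deployment manifests for the chat application server: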

apiVersion: v1
kind: Service
metadata:
  annotations:
    kompose.cmd: kompose convert
    kompose.version: 1.33.0 (HEAD)
  labels:
    io.kompose.service: blabber-hive
  name: blabber-hive
spec:
  type: NodePort
  ports:
    - name: "8080"
      port: 8080
      targetPort: 8080
      nodePort: 30080 # Add this line
  selector:
    io.kompose.service: blabber-hive
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    kompose.cmd: kompose convert
    kompose.version: 1.33.0 (HEAD)
  labels:
    io.kompose.service: blabber-hive
  name: blabber-hive
spec:
  replicas: 1
  selector:
    matchLabels:
      io.kompose.service: blabber-hive
  template:
    metadata:
      annotations:
        kompose.cmd: kompose convert
        kompose.version: 1.33.0 (HEAD)
      labels:
        io.kompose.network/blabber-hive-blabber-hive: "true"
        io.kompose.service: blabber-hive
    spec:
      containers:
        - env:
            - name: BULK_INSERT_SIZE
              valueFrom:
                configMapKeyRef:
                  key: BULK_INSERT_SIZE
                  name: blabber-hive-env-docker
            - name: BULK_INSERT_TIME
              valueFrom:
                configMapKeyRef:
                  key: BULK_INSERT_TIME
                  name: blabber-hive-env-docker
            - name: JWT_SECRET
              valueFrom:
                configMapKeyRef:
                  key: JWT_SECRET
                  name: blabber-hive-env-docker
            - name: KAFKA_BROKER_URL
              valueFrom:
                configMapKeyRef:
                  key: KAFKA_BROKER_URL
                  name: blabber-hive-env-docker
            ...
          image: blabber-hive:v1
          name: blabber-hive
          ports:
            - containerPort: 8080
              protocol: TCP
      restartPolicy: Always

With the NodePort Service above, I could access the chat application server at localhost:30080.
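The long list of configMapKeyRef entries is what Kompose generates, but it can be shortened. The sketch below is one possible variant, not the manifest I actually deployed: it loads every key of the blabber-hive-env-docker ConfigMap with envFrom, and moves the sensitive value into a Secret. The Secret name blabber-hive-secrets and its placeholder value are hypothetical.

```yaml
# Hypothetical Secret for sensitive values (name and value are placeholders)
apiVersion: v1
kind: Secret
metadata:
  name: blabber-hive-secrets
type: Opaque
stringData:
  JWT_SECRET: replace-me
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: blabber-hive
  labels:
    io.kompose.service: blabber-hive
spec:
  replicas: 1
  selector:
    matchLabels:
      io.kompose.service: blabber-hive
  template:
    metadata:
      labels:
        io.kompose.service: blabber-hive
    spec:
      containers:
        - name: blabber-hive
          image: blabber-hive:v1
          ports:
            - containerPort: 8080
          # Every key in each source becomes an environment variable
          envFrom:
            - configMapRef:
                name: blabber-hive-env-docker
            - secretRef:
                name: blabber-hive-secrets
```

If the same key exists in both sources, the source listed last wins, so the Secret would override a JWT_SECRET entry left in the ConfigMap.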

I created similar Service and Deployment manifests for the other services, such as FastAPI, Nginx, and Zookeeper. Here is the FastAPI one:

apiVersion: v1
kind: Service
metadata:
  annotations:
    kompose.cmd: kompose convert
    kompose.version: 1.33.0 (HEAD)
  labels:
    io.kompose.service: fastapi
  name: fastapi
spec:
  type: NodePort
  ports:
    - name: '8000'
      port: 8000
      targetPort: 8000
      nodePort: 30000
  selector:
    io.kompose.service: fastapi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    kompose.cmd: kompose convert
    kompose.version: 1.33.0 (HEAD)
  labels:
    io.kompose.service: fastapi
  name: fastapi
spec:
  replicas: 1
  selector:
    matchLabels:
      io.kompose.service: fastapi
  template:
    metadata:
      annotations:
        kompose.cmd: kompose convert
        kompose.version: 1.33.0 (HEAD)
      labels:
        io.kompose.network/blabber-hive-blabber-hive: 'true'
        io.kompose.service: fastapi
    spec:
      containers:
        - env:
            - name: TZ
              value: Asia/Seoul
          image: sentiment-analysis:v1
          name: sentiment-analysis-server
          ports:
            - containerPort: 8000
              hostPort: 8000
              protocol: TCP
      restartPolicy: Always

Nginx Deployment

apiVersion: v1
kind: Service
metadata:
  annotations:
    kompose.cmd: kompose convert
    kompose.version: 1.33.0 (HEAD)
  labels:
    io.kompose.service: nginx
  name: nginx
spec:
  type: NodePort # Add this line
  ports:
    - name: '8001'
      port: 8001
      targetPort: 80
      nodePort: 30001 # Add this line
  selector:
    io.kompose.service: nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    kompose.cmd: kompose convert
    kompose.version: 1.33.0 (HEAD)
  labels:
    io.kompose.service: nginx
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      io.kompose.service: nginx
  strategy:
    type: Recreate
  template:
    metadata:
      annotations:
        kompose.cmd: kompose convert
        kompose.version: 1.33.0 (HEAD)
      labels:
        io.kompose.network/blabber-hive-blabber-hive: 'true'
        io.kompose.service: nginx
    spec:
      containers:
        - image: nginx:latest
          name: nginx
          ports:
            - containerPort: 80
              hostPort: 8001
              protocol: TCP
          volumeMounts:
            - mountPath: /etc/nginx/nginx.conf
              name: nginx-cm0
              subPath: nginx.conf
      restartPolicy: Always
      volumes:
        - configMap:
            items:
              - key: nginx.conf
                path: nginx.conf
            name: nginx-cm0
          name: nginx-cm0

Dealing with Stateful Services

Kompose generated every workload as a Deployment, but for stateful applications such as databases and message brokers, a StatefulSet is recommended instead.

Here's a summary of the main Kubernetes resource types:

Deployments

- Purpose: Manage stateless applications.

- Features: Rolling updates for zero-downtime. Easy scaling. Self-healing capabilities. Declarative configuration.

StatefulSets

- Purpose: Manage stateful applications.

- Features: Stable, unique pod identifiers. Ordered deployment and scaling. Persistent storage with PersistentVolumeClaims (PVCs). Stable network identities.

ConfigMaps

- Purpose: Manage configuration data separately from application code.

- Features: Store key-value pairs. Consume as environment variables, command-line arguments, or mounted volumes. Dynamic reconfiguration without rebuilding images.

DaemonSets

- Purpose: Ensure a copy of a pod runs on all (or some) nodes.

- Use Case: Background tasks like log collection and monitoring.

Jobs and CronJobs

- Jobs: Run tasks to completion a specified number of times.

- CronJobs: Schedule Jobs to run at specific times.

ReplicaSets

- Purpose: Ensure a specified number of pod replicas are running.

- Note: Used by Deployments under the hood but can be used directly.

PersistentVolume (PV) and PersistentVolumeClaim (PVC)

- PV: Provisioned storage in the cluster.

- PVC: User requests for storage. Pods use PVCs to claim storage resources.

In short, I had to convert the Deployment manifests to StatefulSets for the stateful services, Postgres and Kafka.

Here are the Postgres manifests (two Services, the StatefulSet, and a PersistentVolumeClaim):

---
apiVersion: v1
kind: Service
metadata:
  name: postgres-nodeport
spec:
  type: NodePort
  ports:
    - port: 5432
      targetPort: 5432
      nodePort: 32222
  selector:
    app: blabber-hive
    tier: postgres
---
apiVersion: v1
kind: Service
metadata:
  annotations:
    kompose.cmd: kompose convert
    kompose.version: 1.33.0 (HEAD)
  labels:
    app: blabber-hive
    tier: postgres
    io.kompose.service: postgres
  name: postgres
spec:
  ports:
    - port: 5432
  selector:
    io.kompose.network/blabber-hive-blabber-hive: 'true'
    io.kompose.service: postgres
  # Do not use this clusterIP: None
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  annotations:
    kompose.cmd: kompose convert
    kompose.version: 1.33.0 (HEAD)
  labels:
    io.kompose.service: postgres
  name: postgres
spec:
  serviceName: postgres
  replicas: 1
  selector:
    matchLabels:
      io.kompose.service: postgres
      app: blabber-hive
      tier: postgres
  # strategy: # Not need to specify the strategy for StatefulSet
  #   type: Recreate
  template:
    metadata:
      annotations:
        kompose.cmd: kompose convert
        kompose.version: 1.33.0 (HEAD)
      labels:
        io.kompose.network/blabber-hive-blabber-hive: 'true'
        io.kompose.service: postgres
        app: blabber-hive
        tier: postgres
    spec:
      containers:
        - env:
            - name: BULK_INSERT_SIZE
              valueFrom:
                configMapKeyRef:
                  key: BULK_INSERT_SIZE
                  name: postgres-env-docker
            - name: BULK_INSERT_TIME
              valueFrom:
                configMapKeyRef:
                  key: BULK_INSERT_TIME
                  name: postgres-env-docker
            - name: JWT_SECRET
              valueFrom:
                configMapKeyRef:
                  key: JWT_SECRET
                  name: postgres-env-docker
            - name: KAFKA_BROKER_URL
              valueFrom:
                configMapKeyRef:
                  key: KAFKA_BROKER_URL
                  name: postgres-env-docker
            - name: POSTGRES_HOST
              valueFrom:
                configMapKeyRef:
                  key: POSTGRES_HOST
                  name: postgres-env-docker
            - name: POSTGRES_PASSWORD
              valueFrom:
                configMapKeyRef:
                  key: POSTGRES_PASSWORD
                  name: postgres-env-docker
            - name: POSTGRES_USERNAME
              valueFrom:
                configMapKeyRef:
                  key: POSTGRES_USERNAME
                  name: postgres-env-docker
            - name: REDIS_URL
              valueFrom:
                configMapKeyRef:
                  key: REDIS_URL
                  name: postgres-env-docker
            - name: SUPABASE_AUTH
              valueFrom:
                configMapKeyRef:
                  key: SUPABASE_AUTH
                  name: postgres-env-docker
            - name: SUPABASE_DOMAIN
              valueFrom:
                configMapKeyRef:
                  key: SUPABASE_DOMAIN
                  name: postgres-env-docker
            - name: SUPABASE_POSTGRES_PASSWORD
              valueFrom:
                configMapKeyRef:
                  key: SUPABASE_POSTGRES_PASSWORD
                  name: postgres-env-docker
            - name: SUPABASE_SECRET_KEY
              valueFrom:
                configMapKeyRef:
                  key: SUPABASE_SECRET_KEY
                  name: postgres-env-docker
          image: postgres:15.5-alpine
          name: blabber-hive-postgres
          ports:
            - containerPort: 5432
              hostPort: 5432
              protocol: TCP
          volumeMounts:
            - name: postgres-data
              mountPath: /var/lib/postgresql/data
      volumes:
        - name: postgres-data
          hostPath:
            path: /home/geonhyuk/Documents/CS/Projects/blabber-hive/database
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-data
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi

I had to remove clusterIP: None from the Service manifest and add a PersistentVolumeClaim (PVC) alongside the StatefulSet.

Setting clusterIP: None makes the Service headless: it gets no virtual cluster IP, and its DNS name resolves directly to the pod IPs. In my setup, other services such as the chat application server could not reach Postgres through the headless Service, so I removed the setting and let Kubernetes assign a regular ClusterIP.
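For reference, the more conventional StatefulSet layout pairs a headless Service with volumeClaimTemplates, so that each pod gets its own PVC instead of a hostPath volume. The sketch below shows that pattern under a few assumptions; it is not the manifest I ran. The Service name postgres-headless is hypothetical, and envFrom is used only to keep the example short.

```yaml
# Headless Service: gives StatefulSet pods stable DNS names (hypothetical name)
apiVersion: v1
kind: Service
metadata:
  name: postgres-headless
spec:
  clusterIP: None
  selector:
    io.kompose.service: postgres
  ports:
    - port: 5432
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: postgres
spec:
  serviceName: postgres-headless
  replicas: 1
  selector:
    matchLabels:
      io.kompose.service: postgres
  template:
    metadata:
      labels:
        io.kompose.service: postgres
    spec:
      containers:
        - name: blabber-hive-postgres
          image: postgres:15.5-alpine
          ports:
            - containerPort: 5432
          # Loads POSTGRES_PASSWORD and the other keys from the ConfigMap
          envFrom:
            - configMapRef:
                name: postgres-env-docker
          volumeMounts:
            - name: postgres-data
              mountPath: /var/lib/postgresql/data
  # One PVC is created per pod from this template (postgres-data-postgres-0)
  volumeClaimTemplates:
    - metadata:
        name: postgres-data
      spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 20Gi
```

With this layout, a separate regular ClusterIP Service can still sit in front of the same pods for clients that expect a single stable address.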

For Kafka, I also had to convert the Deployment manifest to a StatefulSet.

I also had trouble creating the Kafka topics automatically. I spent a couple of hours trying to run the topic creation script inside the Kafka container, but it did not work, so I decided to create the topics manually. Here are the Zookeeper and Kafka manifests:

apiVersion: v1
kind: Service
metadata:
  name: zookeeper
spec:
  selector:
    io.kompose.service: zookeeper
  ports:
    - port: 2181
      targetPort: 2181
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    kompose.cmd: kompose convert
    kompose.version: 1.33.0 (HEAD)
  labels:
    io.kompose.service: zookeeper
  name: zookeeper
spec:
  replicas: 1
  selector:
    matchLabels:
      io.kompose.service: zookeeper
  template:
    metadata:
      annotations:
        kompose.cmd: kompose convert
        kompose.version: 1.33.0 (HEAD)
      labels:
        io.kompose.network/blabber-hive-blabber-hive: 'true'
        io.kompose.service: zookeeper
    spec:
      containers:
        - env:
            - name: ZOOKEEPER_CLIENT_PORT
              value: '2181'
            - name: ZOOKEEPER_TICK_TIME
              value: '2000'
          image: confluentinc/cp-zookeeper:7.4.3
          name: blabber-hive-zookeeper
      restartPolicy: Always
---
apiVersion: v1
kind: Service
metadata:
  annotations:
    kompose.cmd: kompose convert
    kompose.version: 1.33.0 (HEAD)
  labels:
    io.kompose.service: broker
  name: broker
spec:
  ports:
    - name: '9092'
      port: 9092
      targetPort: 9092
  selector:
    io.kompose.service: broker
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  annotations:
    kompose.cmd: kompose convert
    kompose.version: 1.33.0 (HEAD)
  labels:
    io.kompose.service: broker
  name: broker
spec:
  serviceName: broker
  replicas: 1
  selector:
    matchLabels:
      io.kompose.service: broker
  template:
    metadata:
      annotations:
        kompose.cmd: kompose convert
        kompose.version: 1.33.0 (HEAD)
      labels:
        io.kompose.network/blabber-hive-blabber-hive: 'true'
        io.kompose.service: broker
    spec:
      containers:
        - env:
            - name: KAFKA_ADVERTISED_LISTENERS
              value: PLAINTEXT://broker:9092,PLAINTEXT_INTERNAL://broker:29092
            - name: KAFKA_BROKER_ID
              value: '1'
            - name: KAFKA_LISTENERS
              value: PLAINTEXT://0.0.0.0:9092,PLAINTEXT_INTERNAL://0.0.0.0:29092
            - name: KAFKA_LISTENER_SECURITY_PROTOCOL_MAP
              value: PLAINTEXT:PLAINTEXT,PLAINTEXT_INTERNAL:PLAINTEXT
            - name: KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR
              value: '1'
            - name: KAFKA_TRANSACTION_STATE_LOG_MIN_ISR
              value: '1'
            - name: KAFKA_TRANSACTION_STATE_LOG_REPLICATION_FACTOR
              value: '1'
            - name: KAFKA_ZOOKEEPER_CONNECT
              value: zookeeper:2181
          image: confluentinc/cp-kafka:7.4.3
          name: blabber-hive-broker
          ports:
            - containerPort: 9092
              hostPort: 9092
              protocol: TCP
          volumeMounts:
            - mountPath: /wait-for-it.sh
              name: broker-claim0
      restartPolicy: Always
      volumes:
        - name: broker-claim0
          persistentVolumeClaim:
            claimName: broker-claim0
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  labels:
    io.kompose.service: broker-claim0
  name: broker-claim0
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Mi

This let me open a shell in the Kafka broker container (a StatefulSet named broker with one replica names its pod broker-0):

kubectl exec -it <broker-pod-name> -- /bin/bash

And create the Kafka topics manually from inside the container:

KAFKA_BROKER="broker:9092"

/usr/bin/kafka-topics --create --bootstrap-server "$KAFKA_BROKER" --topic messages --partitions 3 --replication-factor 1
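One way this manual step might be automated is a one-off Kubernetes Job that runs the same kafka-topics command from the same image. I have not verified this in my cluster, so treat it as a sketch: the Job name create-kafka-topics is hypothetical, and --if-not-exists is added so that a re-run does not fail when the topic already exists.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: create-kafka-topics # hypothetical name
spec:
  backoffLimit: 5 # retry while the broker is still starting up
  template:
    spec:
      restartPolicy: OnFailure
      containers:
        - name: create-topics
          image: confluentinc/cp-kafka:7.4.3
          # Overrides the image entrypoint so only the CLI runs, not a broker
          command:
            - /usr/bin/kafka-topics
            - --create
            - --if-not-exists
            - --bootstrap-server
            - broker:9092
            - --topic
            - messages
            - --partitions
            - "3"
            - --replication-factor
            - "1"
```

The Job reaches the broker through the broker Service on port 9092, which matches the PLAINTEXT advertised listener in the StatefulSet above.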

Conclusion and Next Steps

After these steps, I finally port-forwarded the Nginx service to localhost:8001:

kubectl port-forward svc/nginx 8001:8001

My frontend, which runs outside the cluster on port 3000, could then reach the chat application server inside the cluster through the Nginx reverse proxy.

The Nginx configuration is the same as in the Docker Compose version, so I didn't have to change anything.

worker_processes 1;

events {
    worker_connections 1024;
}

http {
    upstream blabber-hive {
        server blabber-hive:8080;
    }

    upstream fastapi {
        server fastapi:8000;
    }

    server {
        listen 80;
        server_name localhost;

        location /api/ {
            proxy_pass http://blabber-hive;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

            # Prevent Nginx from intercepting 401 and 403 responses
            proxy_intercept_errors off;
            error_page 401 403 = @handle_errors;
        }

        # Custom location block to handle 401 and 403 responses
        location @handle_errors {
            # Forward the original response from the upstream server
            proxy_pass http://blabber-hive;
            proxy_intercept_errors off;
        }

        # WebSocket location block
        location /ws/ {
            proxy_pass http://blabber-hive/ws/;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
            proxy_set_header Host $host;
            proxy_cache_bypass $http_upgrade;
            #proxy_set_header X-Real-IP $remote_addr;
            #proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }

        location /ml/api/ {
            proxy_pass http://fastapi/api/;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }
    }
}

However, Prometheus and Grafana were still not accessible from the chat application server.

I will continue to work on this issue in the next blog post.

In the next blog post, I will probably also discuss how to set up an EKS cluster on AWS and deploy the applications to the cloud.